AI Inference Server Market Supports New Technology With USD 133.2 Billion By 2034, Regional Growth at USD 9.34 Billion

The AI Inference Server Market size is expected to be worth around USD 133.2 Billion By 2034, growing at a CAGR of 18.40% during the forecast from 2025 to 2034.

NEW YORK, NY, UNITED STATES, January 23, 2025 /EINPresswire.com/ -- The rising demand for artificial intelligence (AI) applications across industries, such as healthcare, finance, and retail, plays a significant role in the expansion of this market. As organizations increasingly rely on AI for decision-making, the need for advanced AI inference servers that can handle complex workloads is rapidly growing.

Technological advancements are also crucial in shaping the market. The continuous improvements in machine learning algorithms, data processing, and hardware acceleration have enabled faster, more efficient AI inference, making these servers more attractive to enterprises. The integration of edge computing with AI inference servers is further enhancing processing power, allowing data to be processed closer to the source for faster decision-making.

Click Here to Get the Research Sample in PDF Format @ https://market.us/report/ai-inference-server-market/request-sample/

Moreover, the growing shift towards automation and digital transformation in industries is pushing the demand for AI-based solutions, fueling the market's growth. Businesses are keen to adopt AI inference servers to enhance their computational capabilities and improve efficiency, thereby propelling the market forward through the next decade. These technological innovations and business needs are expected to continue to drive the market’s expansion.

In 2024, the global AI Inference Server Market is valued at USD 24.6 billion and is projected to reach USD 133.2 billion by 2034, growing at a remarkable CAGR of 18.40%. Several key factors are driving this robust growth.

Get the Detailed Report at Exclusive Discount @ https://market.us/purchase-report/?report_id=137775

Key Statistics

Performance Metrics

Throughput: High-performance AI inference servers can process over 1,500 images per second, particularly when optimized with frameworks like TensorRT or ONNX Runtime, allowing for efficient deep learning model execution.

Latency: Optimized models can achieve inference latency as low as 5 to 10 milliseconds per request, crucial for applications requiring real-time responses, such as autonomous driving or live video analytics.

Hardware Utilization

GPU Utilization: Many AI inference servers leverage NVIDIA GPUs, with the latest A100 Tensor Core GPU providing up to 312 teraflops of AI performance for mixed-precision tasks, enabling efficient model handling.

TPU Performance: Google’s Tensor Processing Units (TPUs) can deliver up to 420 teraflops of performance, making them ideal for large-scale AI applications that demand high computational power.

Scalability and Capacity

Concurrent Requests: AI inference servers can manage thousands of concurrent requests, with some configurations supporting up to 10,000 concurrent sessions, making them suitable for high-demand environments.

Model Deployment: Many organizations deploy multiple models on a single server, capable of hosting over 50 models simultaneously without significant performance degradation.

Energy Efficiency

Performance-per-Watt: Modern servers can process up to 100 teraflops while consuming under 300 watts, which is critical for reducing operational costs and the environmental footprint of data centers.

Cooling Efficiency: Some AI inference servers can operate at temperatures above 40°C, thanks to advanced cooling technologies, ensuring sustained performance without overheating.

Market Adoption

Framework Support: About 85% of AI inference workloads are executed on popular frameworks like TensorFlow and PyTorch, reflecting their dominance in the industry.

Growth Rate: The global demand for AI inference servers is rapidly growing, with a projected CAGR of 18.40% from 2023 to 2030.

Key Takeaways

- Market Growth: The AI Inference Server market is expected to grow from USD 24.6 billion in 2024 to USD 133.2 billion by 2034, reflecting a strong CAGR of 18.40%.

- Component Breakdown: Hardware holds the largest market share, accounting for 61% of the total market.

- Deployment: The cloud-based deployment model leads, representing 55% of the market.

- Application Focus: Image recognition dominates applications, making up 40% of the market.

- Enterprise Size: Large enterprises represent the majority, holding a 65% share of the market.

- End-User Sector: The banking, financial services, and insurance (BFSI) sector is a major end-user, contributing 23% to the market.

- Geographical Distribution: North America is the leading region, capturing 38% of the global market share.

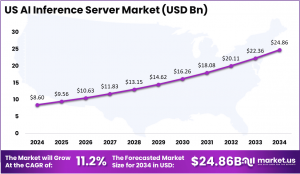

- U.S. Market Insights: The U.S. market is valued at USD 8.6 billion, with a steady CAGR of 11.2%, indicating stable growth in the region.

Request Sample Here To Get Detailed Insights @ https://market.us/report/ai-inference-server-market/request-sample/

Experts Review

Government incentives play a significant role in the growth of the AI Inference Server market. Many governments, particularly in North America and Europe, are offering funding and tax benefits to support AI research and development, creating a favorable environment for market expansion. These incentives drive investments in technological innovations, which are advancing AI inference capabilities. Breakthroughs in hardware acceleration, machine learning algorithms, and edge computing are further propelling the market.

Investment opportunities abound as demand for AI applications across industries like healthcare, finance, and automotive grows. Companies are increasingly investing in AI infrastructure, presenting opportunities for both established players and startups. However, risks remain, including high capital expenditure, the challenge of integrating AI solutions with legacy systems, and cybersecurity concerns.

Consumer awareness is rising, with businesses becoming more cognizant of the efficiency AI can bring. This has led to increased demand for AI-driven products. Technological impacts such as automation, real-time data processing, and personalized experiences are transforming industries.

The regulatory environment is evolving as governments address the ethical implications and security of AI technologies. As AI solutions become more embedded in society, regulatory bodies are crafting frameworks to ensure safe and fair usage, which will be pivotal in shaping the market’s future growth.

Report Segmentation

The AI Inference Server market is segmented across several key dimensions. By component, hardware leads the market, accounting for the largest share, with GPUs, TPUs, and custom AI chips being integral parts of AI systems. Software, including frameworks and optimization tools such as TensorFlow, ONNX Runtime, and TensorRT, also plays a significant role. By deployment, the cloud-based model dominates, favored for its scalability and cost-effectiveness, while on-premises deployment is chosen by enterprises with specific security and latency needs.

In terms of application, image recognition holds the largest share, widely used in sectors like retail, automotive, and healthcare, followed by natural language processing, which is gaining ground in AI-powered customer service applications. Regarding enterprise size, large enterprises dominate the market due to their substantial investments in AI infrastructure, though small and medium enterprises are gradually adopting AI servers as technology becomes more accessible.

Finally, by the end-user sector, the BFSI (banking, financial services, and insurance) sector is a major contributor, leveraging AI for fraud detection and customer service, alongside significant growth in the healthcare, retail, and automotive sectors. This segmentation highlights the diverse opportunities and challenges within the market, showing how different sectors and deployment models influence market dynamics.

Key Market Segments

By Component

—Hardware

—Software

—Service

By Deployment

—On-premises

—Cloud-based

By Application

—Image Recognition

—Natural Language Processing

—Video Analytics

By Enterprise Size

—Small and Medium Enterprises

—Large Enterprises

By End-User

—BFSI

—Healthcare

—Retail and E-commerce

—Media and Entertainment

—Manufacturing

—IT and Telecommunications

—Others

Drivers

The growing adoption of AI across industries is a major driver for the AI Inference Server market. As sectors like healthcare, automotive, and finance increasingly rely on AI for automation, real-time data processing, and decision-making, the demand for efficient inference servers rises. Technological advancements in machine learning algorithms, hardware acceleration (e.g., GPUs and TPUs), and edge computing are enhancing server performance and driving market growth. Additionally, the shift towards cloud-based deployments offers scalability and cost efficiency, further fueling market adoption.

Restraints

One of the key restraints is the high capital expenditure required for setting up AI inference infrastructure, which can be a barrier for small and medium enterprises. The complexity of integrating AI systems with legacy infrastructure also limits market growth, as businesses face technical challenges in ensuring smooth deployment. Additionally, concerns about data privacy and security may hinder wider adoption.

Challenges

The rapid pace of technological change presents a challenge, as businesses must continuously update their AI systems to stay competitive. Moreover, the demand for low-latency processing can strain server performance, especially in real-time applications like autonomous driving.

Opportunities

The increasing focus on edge computing offers opportunities for AI inference servers to process data closer to the source, reducing latency. Additionally, sectors like healthcare, where AI is used for diagnostics and personalized medicine, present significant growth prospects. Moreover, ongoing government incentives for AI research and development provide funding opportunities for innovation in AI infrastructure.

Get Research Report at Best Price With Exclusive Discount @ https://market.us/purchase-report/?report_id=137775

Key Player Analysis

The AI Inference Server market is dominated by a few key players that are shaping its trajectory through innovation and strategic investments. NVIDIA stands out as the market leader, offering high-performance GPUs like the A100 Tensor Core, which is critical for AI workloads. Their consistent advancements in AI-specific hardware and software optimization tools like TensorRT have set the industry standard, making them a primary choice for businesses looking to harness AI’s full potential.

Google with its Tensor Processing Units (TPUs) is another dominant player, particularly in cloud-based AI applications. Google’s specialized hardware, offering unparalleled performance, especially in deep learning tasks, positions them as a strong competitor in large-scale AI deployment.

Intel, known for its widespread hardware solutions, is making strides in the AI inference space with its Xeon processors and AI-optimized accelerators. Despite not having the specialized focus of NVIDIA or Google, their well-established presence in data centers gives them an edge in integrating AI into existing infrastructures.

Microsoft is also positioning itself as a key player through Azure’s cloud-based AI inference capabilities, leveraging both its extensive cloud infrastructure and AI expertise to deliver scalable solutions.

Top Key Players in the Market

NVIDIA Corporation

Intel Corporation

Google LLC

Microsoft Corporation

Amazon Web Services, Inc.

IBM Corporation

Advanced Micro Devices, Inc. (AMD)

Qualcomm Technologies, Inc.

Alibaba Group Holding Limited

Baidu, Inc.

Huawei Technologies Co., Ltd.

Oracle Corporation

Dell Technologies Inc.

Hewlett Packard Enterprise (HPE)

Cisco Systems, Inc.

Fujitsu Limited

Graphcore Limited

Xilinx, Inc.

Tencent Holdings Limited

Samsung Electronics Co., Ltd.

Other Key Players

Recent Developments

The AI Inference Server Market is witnessing significant growth, driven by the increasing demand for AI-powered applications across various sectors, including healthcare, finance, and automotive. North America leads the market, with a strong emphasis on cloud-based deployment models that enhance accessibility and scalability.

Key trends include advancements in hardware, particularly Graphics Processing Units (GPUs) and specialized chips designed to improve processing capabilities and support real-time data analysis. Major players such as NVIDIA and Intel are heavily investing in high-performance inference platforms to meet the rising need for low-latency predictions and efficient data processing.

Additionally, emerging applications like image recognition and natural language processing are pivotal in propelling market expansion. As businesses increasingly integrate AI inference servers into their operations, they seek to leverage AI for enhanced operational efficiency and innovation. This trend reflects a broader shift towards AI-driven solutions that enable organizations to remain competitive in a rapidly evolving technological landscape.

Conclusion

The AI Inference Server Market is poised for transformative growth as industries increasingly adopt AI technologies for real-time data processing and decision-making. With a focus on enhancing performance through advanced hardware and cloud-based solutions, companies are investing in high-performance platforms that support diverse applications, from healthcare to autonomous systems.

The ongoing integration of AI inference servers into business operations underscores their critical role in driving efficiency and innovation. As the market continues to evolve, it will be essential for organizations to leverage these technologies effectively to maintain a competitive edge in an increasingly AI-driven landscape.

Explore More Research Reports

Microprocessor and Gpu Market - https://market.us/report/microprocessor-and-gpu-market/

Identity Verification Service and Software Market - https://market.us/report/identity-verification-service-and-software-market/

Digital Battlefield Market - https://market.us/report/digital-battlefield-market/

Anti-Aircraft Warfare Market - https://market.us/report/anti-aircraft-warfare-market/

Aircraft Engine Market - https://market.us/report/aircraft-engine-market/

Electronic Shift Operations Management Solutions (eSOMS) Market - https://market.us/report/electronic-shift-operations-management-solutions-esoms-market/

Human Capital Management Market - https://market.us/report/human-capital-management-market/

AI in Data Quality Market - https://market.us/report/ai-in-data-quality-market/

AI in Teaching Market - https://market.us/report/ai-in-teaching-market/

AI In ERP Market - https://market.us/report/ai-in-erp-market/

Operational Technology (OT) Security Market - https://market.us/report/operational-technology-ot-security-market/

Lawrence John

Prudour

+91 91308 55334

Lawrence@prudour.com

Visit us on social media:

Facebook

LinkedIn

Distribution channels: Technology

Legal Disclaimer:

EIN Presswire provides this news content "as is" without warranty of any kind. We do not accept any responsibility or liability for the accuracy, content, images, videos, licenses, completeness, legality, or reliability of the information contained in this article. If you have any complaints or copyright issues related to this article, kindly contact the author above.

Submit your press release